Multivariate Testing vs. A/B Testing: Choosing the Right Strategy for Your Ecommerce Brand

[ SUMMARIZE WITH AI ]

[ AD TO LP AUDIT ]

99% sure you're wasting Meta ad spend.

Are your ads and landing pages rhyming, or creating a disjointed experience losing customers and money?

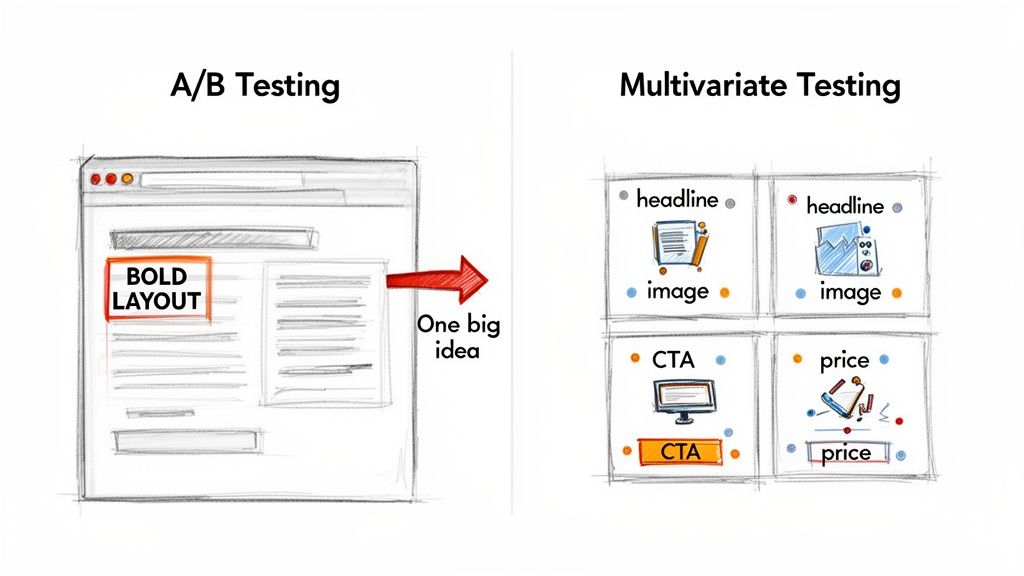

For ecommerce leaders, the choice boils down to this: use A/B testing when you need to validate one big, bold change, like a completely redesigned product page. Use multivariate testing (MVT) when you want to fine-tune multiple smaller elements at once, like a headline, image, and call-to-action, to find the highest-performing combination.

A/B testing gives you a clear 'yes' or 'no' on a major hypothesis, making it ideal for validating large-scale changes or for sites without massive traffic. MVT is a powerful tool for high-traffic stores that want to understand how different elements interact to squeeze every last drop of performance out of a page.

Comparing A/B and Multivariate Testing

Deciding between an A/B test and a multivariate test is a strategic move that shapes your entire conversion optimization roadmap. Getting it wrong means wasted traffic, inconclusive results, and leaving money on the table. One method gives you fast, directional answers, while the other uncovers deep, nuanced insights into how your customers actually behave.

For fast-growing DTC brands, testing is what connects your ad spend to your on-site experience. It's how you prove that the winning message on TikTok actually converts on your landing page. This is critical because a disconnect here costs you. An early study on ecommerce behavior found that 87% of shoppers abandon their carts, often because the ad's promise didn't match the site's reality.

This is where testing proves its worth. According to the Nielsen Norman Group, A/B testing is ideal for radical, single changes. If you're interested in how testing has evolved, you can explore more on testing strategies here.

Core Differences at a Glance

The biggest difference comes down to scope and traffic requirements. Think of an A/B test as a duel: Version A vs. Version B. A multivariate test is a complex tournament, where you're testing multiple element variations in different combinations to find the single most effective formula.

This table cuts through the noise and lays out the practical differences to help you decide which approach makes sense for your current goals.

| Criterion | A/B Testing (Split Testing) | Multivariate Testing (MVT) |
|---|---|---|
| Primary Goal | To determine which of two or more distinct versions of a page performs better. | To identify which combination of multiple page elements performs best. |
| Use Case | Ideal for radical redesigns, new feature validation, or significant UX changes. | Perfect for optimizing high-traffic pages by refining existing elements. |
| Traffic Needs | Lower. Effective for sites with moderate traffic, as it's split between fewer versions. | Higher. Requires substantial traffic to achieve statistical significance for each combination. |
| Key Insight | Provides a clear "winner" between different concepts. Answers "Which one is better?" | Reveals the impact of individual elements and their interactions. Answers "What combination is best?" |

Ultimately, A/B testing gives you a clear winner between whole concepts, while MVT tells you which specific ingredients create the best recipe for conversions. Your choice depends entirely on the question you're trying to answer and the traffic you have to work with.

How A/B Testing Drives High-Impact Wins

When you need a clear, decisive answer on a major change, A/B testing is your best tool. It’s designed to validate one big idea at a time by pitting a new version directly against the old one. This gives you a simple, straightforward verdict: did it work or not?

Think about foundational shifts to your user experience: a complete product detail page (PDP) overhaul, a new pricing strategy, or an entirely different checkout flow. These aren't minor tweaks. A/B testing shines here because it isolates the impact of that single, bold change, giving you a clean win or loss without the noise of multiple variables.

A Real-World Shopify Plus Scenario

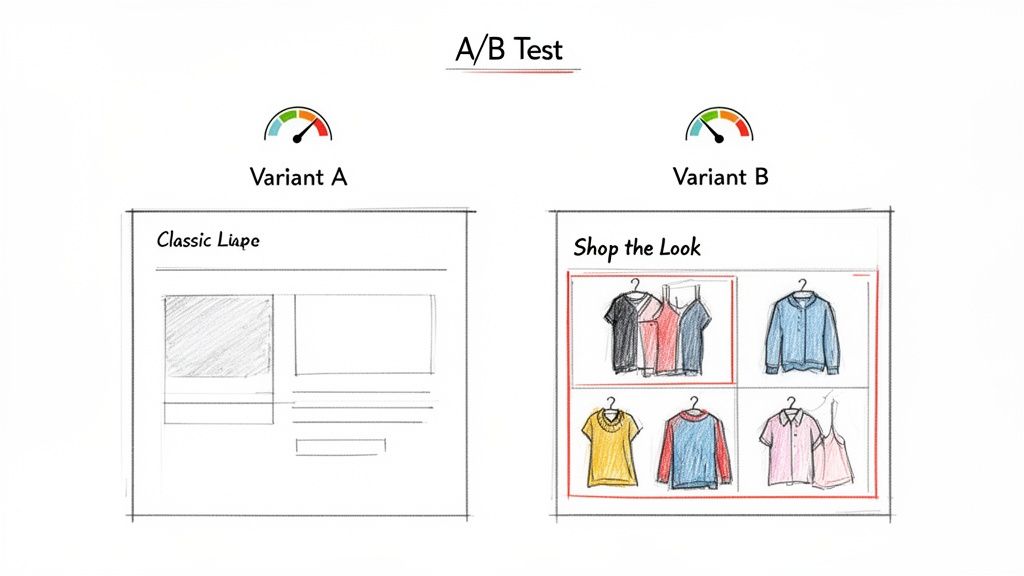

Imagine a Shopify Plus apparel brand. Their current PDP is a standard grid of product photos, a description, and an "Add to Cart" button. It gets the job done, but the team has a hunch: a more editorial, content-first approach could boost Average Order Value (AOV) by inspiring customers to buy a whole look.

Their big idea is a "Shop the Look" feature. This new PDP (Variant B) scraps the standard grid for a lifestyle photo of a model wearing a complete outfit. Each item in the photo is clickable, allowing customers to add pieces to their cart right from the image.

Here's how the A/B test is set up:

- Control (Version A): The original, classic PDP layout.

- Variant (Version B): The new "Shop the Look" PDP.

- Primary Metric: Average Order Value (AOV).

- Secondary Metric: Conversion Rate (to ensure the new design doesn't accidentally kill sales).

Traffic gets split 50/50 between the two pages. After running the test for three weeks to cover multiple buying cycles, the results show Variant B lifted AOV by 18% without a statistically significant drop in the overall conversion rate. The verdict is crystal clear: The "Shop the Look" concept is a home run.
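To make the "no statistically significant drop" check concrete, here is a minimal sketch of a two-proportion z-test on the secondary metric. The visitor and order counts are hypothetical, chosen only for illustration; they are not results from the scenario above, and most testing platforms run an equivalent check for you.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rate between two variants."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 30,000 visitors per arm, 600 vs. 585 orders.
z, p_value = two_proportion_z_test(conv_a=600, n_a=30_000, conv_b=585, n_b=30_000)
print(f"z = {z:.2f}, p = {p_value:.3f}")

# A p-value above 0.05 means the observed dip is consistent with random noise.
drop_is_significant = p_value < 0.05
```

With these made-up counts the small dip in Variant B does not reach significance, which is the situation the guardrail metric is meant to confirm before you ship the AOV win.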

An A/B test gives you a definitive green or red light on a major strategic change. It’s the fastest way to validate whether a big investment in design or development will actually pay off.

When to Prioritize A/B Testing

This one-on-one comparison is especially powerful for brands with fewer than 50,000 monthly visitors. Because you’re only splitting traffic between two (or maybe three) versions, the statistical requirements are much lower than for multivariate testing. This means you can get reliable results much faster, even with moderate traffic.

You should reach for A/B testing when you want to:

- Test Radical Redesigns: Compare a completely new homepage, PDP, or navigation structure against the current one.

- Validate Pricing and Offer Strategies: Find out if "20% off" works better than "Buy One, Get One 50% Off."

- Overhaul Checkout Flows: See if a single-page checkout converts better than a multi-step process.

- Measure New Feature Impact: See if adding a major feature like a visual search tool or a subscription option actually moves the needle.

Even small design changes can have an outsized impact on performance.

The main downside of A/B testing is that it can't tell you why one version won. In our "Shop the Look" example, we know the new page performed better, but was it because of the lifestyle image? The interactive hotspots? The new headline? We don’t know. This is exactly where multivariate testing comes in; it’s built to dissect that "why" by analyzing how all the smaller elements work together.

Using Multivariate Testing for Deeper Insights

If A/B testing tells you what works, multivariate testing (MVT) tells you why. Think of it less as a simple verdict and more as a strategic deep-dive. MVT is all about dissecting how individual elements on a page perform and, more importantly, how they perform together. This is where you find the secret sauce for true personalization and those hard-won incremental gains on your most important pages.

Typically, you’ll bring in MVT after an A/B test has already confirmed a winning layout. From there, you can start refining the interplay between headlines, images, calls-to-action, and offers. The question evolves from "Which page is better?" to "Which combination of elements is best for this specific audience?" This is essential for creating a smooth journey from ad to site, making sure the message that caught a customer's eye on Instagram is the same one that greets them on the product page.

Uncovering Interaction Effects

The real magic of MVT is its ability to spot interaction effects. This is what happens when one element’s performance changes because of another element on the page. An A/B test simply can't see this; it just lumps all the changes in a variant together and gives you a single result.

Let's imagine a Shopify Plus apparel brand trying to personalize a product page for a high-intent segment coming from a specific Facebook ad. They could run an MVT with a few moving parts:

- Two Headlines: "Luxury Feel, Lasting Quality" vs. "Engineered for Everyday Wear"

- Three Hero Images: A standard product shot, a lifestyle image, and a user-generated content (UGC) photo.

- Two CTA Buttons: "Add to Cart" vs. "Buy Now"

This setup creates 12 different combinations (2 x 3 x 2 = 12). An A/B test might tell you the "Luxury Feel" page won, but MVT could uncover a game-changing detail: the "Luxury Feel" headline only crushes it when paired with the lifestyle image. That specific combination might boost conversions by 15%. But that same headline with a UGC photo? It might actually hurt sales. That’s an interaction effect, and it’s the kind of granular insight that fuels powerful personalization.
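The combination count is easy to verify by enumerating the full grid. This is a small sketch using the headlines, images, and CTAs from the example above; the variable names are illustrative only.

```python
from itertools import product

headlines = ["Luxury Feel, Lasting Quality", "Engineered for Everyday Wear"]
hero_images = ["product shot", "lifestyle image", "UGC photo"]
cta_buttons = ["Add to Cart", "Buy Now"]

# Every headline is paired with every image and every CTA (a full factorial design).
combinations = list(product(headlines, hero_images, cta_buttons))
print(len(combinations))  # 12

for i, (headline, image, cta) in enumerate(combinations, start=1):
    print(f"{i:>2}. {headline} | {image} | {cta}")
```

Each of those 12 rows is a separate page experience that needs its own share of traffic, which is why adding even one more variation to any element raises the cost of the test.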

MVT isn't just about finding the best headline or the best image. It's about finding the best headline for the best image, creating a synergy that drives more conversions than just optimizing each part on its own.

The High Traffic Requirement

This level of detail comes at a price: traffic. Because you’re splitting your audience across so many different combinations, MVT needs a huge number of visitors to get a statistically reliable result for each one. This is why it’s almost exclusively used by high-revenue brands with tons of consistent traffic to pages like the homepage, key collection pages, or best-selling PDPs.

For instance, picture a $50M Shopify brand running a multivariate test. They want to test four elements: a headline (2 versions), a hero image (2), the CTA button text (3), and the price display (2). That’s 24 unique combinations. To hit 95% statistical confidence, each combo might need around 500 conversions. Suddenly, the test requires a total of 12,000+ conversions, a massive jump from the 1,000 you might need for a simple A/B test. This is why MVT is a tool for brands with mature, high-volume traffic. In fact, you can see from industry benchmarks that this method helps identify 35% more winning combinations precisely because it accounts for these crucial synergies.
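The arithmetic behind that estimate can be sketched in a few lines. The 500 conversions per combination comes from the example above; the 2% conversion rate is an assumption added here purely to translate conversions into visitors, so substitute your own figure.

```python
from math import prod

# Number of variations per element, as described in the example.
variations = {"headline": 2, "hero_image": 2, "cta_text": 3, "price_display": 2}
conversions_per_combo = 500  # rough target used in the text

combos = prod(variations.values())
total_conversions = combos * conversions_per_combo

# Assumption for illustration: the page converts at 2%.
assumed_conversion_rate = 0.02
visitors_needed = round(total_conversions / assumed_conversion_rate)

print(f"{combos} combinations")                   # 24 combinations
print(f"{total_conversions:,} conversions")       # 12,000 conversions
print(f"{visitors_needed:,} visitors at 2% CVR")  # 600,000 visitors at 2% CVR
```

Under that assumed rate, the test needs around 600,000 visitors to the page, which shows how quickly the requirement grows with each added element.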

At CONVERTIBLES, this is how we connect the dots between paid ads and the on-site experience. By personalizing PDPs for high-intent traffic segments, we’ve seen this strategy lift profit per visitor by 18-32%. It’s the engine that powers true, segment-specific optimization and turns broad insights into measurable profit.

Choosing the Right Test for Your Goal

Picking between A/B and multivariate testing is a strategic decision. Get it right, and you unlock valuable insights. Get it wrong, and you'll waste traffic, get muddy results, and leave money on the table. The decision hinges on your specific goals, your website traffic, and the kind of hypothesis you’re trying to prove.

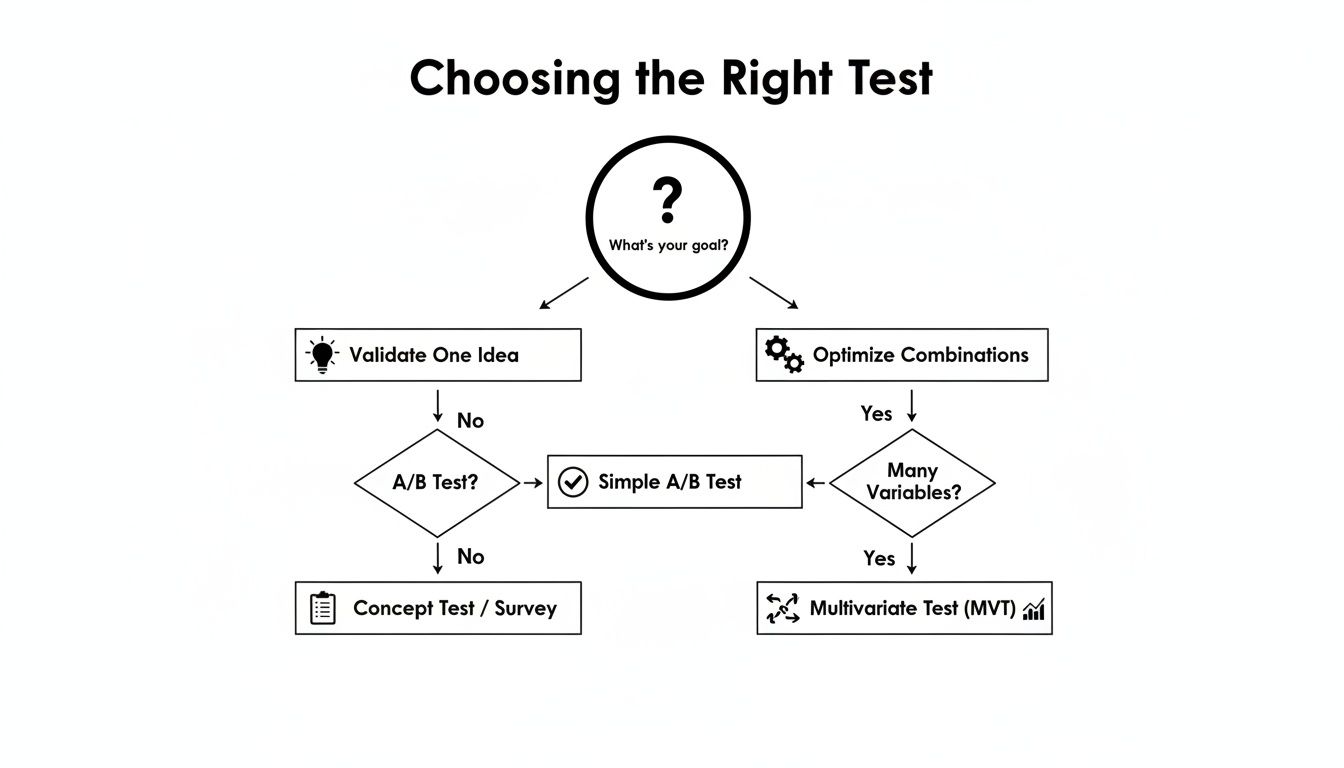

To make the right call, you need to ask a few honest questions first. Are you testing a bold, radical redesign or just tweaking a few elements on an already successful page? Are you trying to validate one big idea, or do you need to understand how multiple small changes work together? The answers will point you directly to the right testing method.

This decision tree helps simplify that initial choice. At its core, are you validating a single concept or optimizing the combination of elements?

The flowchart makes the starting point clear. If your goal is to prove a single, bold hypothesis, an A/B test is your best tool. If you’re trying to refine the interactions between multiple elements, multivariate testing is the way to go.

Traffic Volume and Hypothesis Scope

The first gate you must pass through is always traffic. Do you have enough visitors to run a valid test? If your page gets fewer than 50,000 monthly visitors, A/B testing is almost always the smarter, more practical option. It needs far less traffic to reach statistical significance because you're only splitting visitors between a handful of variations.

With traffic sorted, think about the scope of your hypothesis.

- Radical Redesigns: If you're testing a completely new homepage layout or a fundamentally different checkout flow, you need an A/B test. MVT would be overkill here and wouldn't give you a clear "yes" or "no" on the overall concept.

- Iterative Refinements: If you want to fine-tune an existing, high-performing page by testing a new headline, hero image, and CTA button all at once, MVT is perfect. It’s designed for exactly this kind of granular optimization.
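The two gates above (traffic first, then hypothesis scope) can be written down as a simple rule of thumb. This is a sketch, not a definitive rule: the function name and parameters are hypothetical, and the 50,000-visitor threshold is the heuristic from this section rather than a statistical limit.

```python
def choose_test(monthly_visitors: int, radical_redesign: bool, elements_to_tune: int) -> str:
    """Suggest a test type using the traffic and scope heuristics from this section."""
    # Gate 1: traffic. Below the threshold, splitting across many combinations is impractical.
    if monthly_visitors < 50_000:
        return "A/B"
    # Gate 2: scope. A whole-concept change needs a clear yes/no verdict.
    if radical_redesign:
        return "A/B"
    # Refining several elements at once on a page with enough traffic.
    if elements_to_tune >= 2:
        return "MVT"
    # A single small change is still just an A/B test.
    return "A/B"


print(choose_test(monthly_visitors=30_000, radical_redesign=False, elements_to_tune=3))   # A/B
print(choose_test(monthly_visitors=400_000, radical_redesign=True, elements_to_tune=0))   # A/B
print(choose_test(monthly_visitors=400_000, radical_redesign=False, elements_to_tune=3))  # MVT
```

Treat the output as a starting point for discussion; a proper sample size estimate should still confirm that the chosen test can finish in a reasonable time.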

The Hybrid Approach: A Winning Strategy

You don't always have to pick one and stick with it. In fact, most mature optimization programs use a hybrid approach. Start by running an A/B test to validate a major layout change. Once you have a clear winner, follow it up with a multivariate test on that winning page to optimize the individual components.

Start with A/B testing to find your winning forests, then use MVT to find the tallest trees within them. This methodical approach ensures your big bets are validated before you invest traffic in granular refinement.

This layered strategy gives you the best of both worlds, maximizing insights while respecting your traffic limits. The A/B test gives you the big, directional win, and the MVT delivers the detailed insights you need to continuously improve your ecommerce conversion rate. And remember, sometimes the best insights come from other methods entirely; qualitative feedback from something like usability testing of a website can be incredibly powerful.

Ultimately, choosing the right test comes down to aligning your method with your goal and resources. A high-traffic product detail page is a fantastic candidate for MVT, while a lower-traffic order confirmation page is much better suited for a simple, focused A/B test.

Common Testing Mistakes and How to Fix Them

Even the sharpest testing programs can get derailed by simple, avoidable mistakes. These slip-ups poison your results, burn through valuable traffic, waste developer time, and can kill your team's confidence in the entire conversion optimization program.

For brands that rely on a high testing velocity to stay ahead, these errors are silent ROI killers. Often, the only thing separating a winning program from a stalled one is the discipline to avoid these common traps.

Common A/B Testing Pitfalls

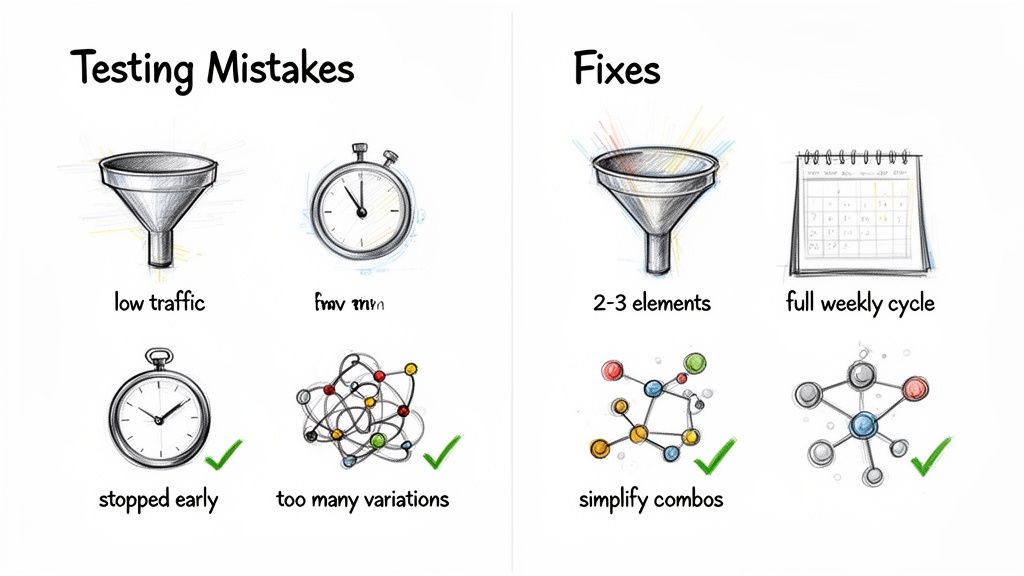

A/B testing looks easy, but that simplicity makes it easy to get wrong. Most errors come down to impatience and a shaky grasp of the stats, leading to big decisions based on random noise instead of a real signal.

- Testing Trivial Changes: This is the most common mistake. Teams spend weeks testing tiny tweaks, like changing a button from one shade of blue to another. Unless you have Amazon-level traffic, these changes almost never produce a statistically significant result.

  The Fix: Go for bigger swings. Your A/B tests should challenge a core assumption about your customers. Test a completely new value proposition, a radically different checkout flow, or an entirely new pricing model.

- Calling a Test Too Soon: It's tempting to declare a winner when a variant shoots up to 95% confidence after a few days. Don't fall for it. This is a classic statistical trap where early, random fluctuations look like a sure thing before the numbers have a chance to even out.

  The Fix: Let every test run for at least two full business cycles, which usually means two weeks. This simple rule ensures you capture the behavior of different types of shoppers, from weekend browsers to weekday commuters and those who only buy around payday.

- Ignoring Statistical Power: Kicking off a test without knowing if you have enough traffic to actually detect a change is just gambling. If your baseline conversion rate is 2% and you’re hoping to see a 10% lift, you need a certain amount of traffic to get a reliable answer.

  The Fix: Use a sample size calculator before you build anything. Decide on the minimum lift you care about, and make sure your test can reach at least 80% statistical power in a reasonable amount of time. If it can't, you need to either aim for a bigger change or rethink the test.
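For a sense of what a sample size calculator does under the hood, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. It assumes a two-sided test at 95% confidence and 80% power, and reads the "10% lift" as a relative lift (2.0% to 2.2%); dedicated calculators may use slightly different formulas and give somewhat different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist


def visitors_per_variant(baseline, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed in each variant to detect the given relative lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


n_small_lift = visitors_per_variant(baseline=0.02, relative_lift=0.10)
n_big_lift = visitors_per_variant(baseline=0.02, relative_lift=0.30)
print(n_small_lift, n_big_lift)
```

With a 2% baseline, detecting a 10% relative lift takes on the order of 80,000 visitors per variant, while a 30% lift needs far fewer. That gap is the quantitative case for testing bigger swings when traffic is limited.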

Common Multivariate Testing Pitfalls

Multivariate testing is a whole different beast. It's far more complex, and the mistakes are often more costly, sometimes leading to months of wasted traffic and data that tells you absolutely nothing. The main challenge is balancing creative ambition with statistical reality.

The whole point of an MVT is to find the perfect combination, but when you add too many elements, you create a statistical nightmare. Your traffic gets spread so thin that no single variation can ever reach significance. You're left with a mountain of data but zero real answers.

The biggest error, by far, is trying to do too much at once.

- Creating Too Many Combinations: Testing four headlines, three images, three calls-to-action, and two different offers creates 72 different combinations (4 x 3 x 3 x 2). Unless you're a top 100 retailer, you simply don't have the traffic to make that work.

  The Fix: Be ruthless. Keep your MVTs focused on 2-3 critical elements with only 2-3 variations each, and cap the total at roughly 12 combinations (for example, 2 x 3 x 2). Three elements with three variations each already produce 27 combinations, so trim until the count is a realistic target for your store's traffic.

- Missing the Interaction Effects: If you just look at which individual element "won" (e.g., the best headline), you've missed the entire reason for running an MVT in the first place. The gold is in seeing how the pieces work together.

  The Fix: When you analyze the results, don't stop at individual element performance; focus on the winning combinations. A good testing platform will show you that "Headline A" paired with "Image B" was the real winner, even if another headline performed better on average. This is where you find the insights that lead to real breakthroughs.
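The difference between "best on average" and "best combination" is easiest to see with numbers. The conversion rates below are entirely hypothetical, invented to illustrate an interaction effect; a real analysis would also check that each cell has enough data to be trusted.

```python
from statistics import mean

# Hypothetical conversion rates per (headline, image) combination.
rates = {
    ("Headline A", "Image A"): 0.020,
    ("Headline A", "Image B"): 0.034,
    ("Headline A", "Image C"): 0.014,
    ("Headline B", "Image A"): 0.026,
    ("Headline B", "Image B"): 0.025,
    ("Headline B", "Image C"): 0.027,
}

# Main effect: average each headline across all images.
headlines = sorted({headline for headline, _ in rates})
avg_by_headline = {
    h: mean(rate for (headline, _), rate in rates.items() if headline == h)
    for h in headlines
}
best_headline_on_average = max(avg_by_headline, key=avg_by_headline.get)

# Interaction view: look at the individual cells instead of the averages.
best_combination = max(rates, key=rates.get)

print(best_headline_on_average)  # Headline B
print(best_combination)          # ('Headline A', 'Image B')
```

In this made-up data, Headline B wins on average, yet the single best page uses Headline A with Image B. Reporting only the per-element winners would have hidden that pairing.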

Building Your CRO and Personalization Tech Stack

A sophisticated testing program is built on more than just good ideas; it runs on the right technology. Building an effective tech stack isn't about collecting shiny new tools. It's about creating a cohesive ecosystem where every piece works together to create better customer experiences and, ultimately, drive more profit.

The goal is to create a seamless feedback loop. Data from your paid ad campaigns should directly inform what you test on your site. The results from those tests should then feed right back into your ad creative, targeting, and offer strategy. This creates a powerful cycle of continuous improvement that just keeps getting smarter.

Core Components of a Modern Stack

For a Shopify Plus brand running tests at scale, the stack usually breaks down into a few key areas. Each one has specialized tools designed for specific jobs, and mixing and matching the right platforms is how you build a program that can handle simple A/B tests and complex multivariate experiments with ease.

- Test Execution and Personalization: This is the engine of your whole operation. Tools like Intelligems are perfect for dialing in your offers, shipping thresholds, and prices, some of the highest-impact tests you can run. For broader layout and UX testing, platforms like Optimizely or VWO provide the robust features needed for complex A/B and multivariate tests on everything from your homepage to the PDP.

- Analysis and Planning: Raw data from a testing tool isn't enough. To understand what’s working and plan your next move, you need a dedicated analysis layer. Our proprietary tool, TestBuddy, gives our clients real-time visibility into test performance. It tracks progress against goals and lays out a clear roadmap for what to test next, closing the gap between just running tests and building a long-term, compounding optimization strategy.

Connecting the Dots for Maximum Impact

A disconnected tech stack creates data silos and missed opportunities. The real magic happens when your tools talk to each other. For example, your customer data platform (CDP) should pass audience segments directly into your testing platform. This lets you personalize the site experience for high-value customers or first-time visitors who clicked through from a specific ad campaign.

An effective tech stack isn't a collection of individual tools, but an integrated system. It's the difference between running isolated tests and executing a cohesive, data-driven personalization program that lifts your entire business.

As you build out your stack, it's critical to track both primary and secondary metrics. A test might boost your primary goal, like conversion rate, but if it also tanks your page load speed (a key secondary metric), you might be creating more problems than you solve. Monitoring both ensures your wins are real and sustainable. For a deeper look into this balanced approach, check out our guide on Shopify conversion rate optimization.

Ultimately, your technology needs to support your strategy, not the other way around. By investing in a well-integrated stack, you create the foundation needed to move beyond generic CRO and into the world of true, segment-specific personalization.

Your Next Step: From Testing to Personalization

The multivariate vs. A/B testing debate isn't about picking a "winner." It's about having the right tool for the right job, for a specific page, a specific goal, and a specific amount of traffic.

Both testing methods are just stepping stones to a much bigger, more profitable strategy: personalization. The days of one-size-fits-all conversion optimization are behind us. The real money is in creating experiences tailored to different segments of your audience.

Transitioning from running one-off tests to building a true personalization program might sound intimidating, but it's really just about being disciplined and strategic. You stop throwing random ideas at the wall and start building a system where every experiment teaches you something, compounding your profit per visitor over time.

That's what true website personalization is all about: delivering the right experience to the right person at the right moment. If you want to dive deeper, we have a whole guide on what website personalization truly means.

A Simple Plan to Get Started

You don't need a huge team or a dozen new tools to move from isolated tests to a structured personalization roadmap. You just need a clear, actionable plan. Here's the three-step framework we use to build programs that deliver consistent, measurable results.

- Audit Your Traffic and Find Opportunities. Before you think about a test, get into your analytics. Find your high-traffic, high-intent pages that can actually support an MVT. For pages with less traffic, flag them for simpler, high-impact A/B tests. This simple audit saves you from wasting time and traffic on tests that were doomed from the start.

- Define Your Hypothesis and Pick Your Method. What are you trying to prove? If you want to test a completely new landing page design against the old one, that’s a textbook A/B test. If you're trying to fine-tune the headline, image, and CTA on a product page that already performs well, that's a perfect job for MVT. A strong hypothesis makes the choice of method obvious.

- Build a Cumulative Testing Roadmap. One-off tests give you one-off wins. A roadmap creates lasting value. Use a tool like TestBuddy to plan your experiments in a logical sequence. The key is to make your learning cumulative, where the insights from one test directly fuel the hypothesis for the next one.

The goal isn't just to find a single 'winner.' It's to build an institutional memory of what works for different audience segments, turning your website into an asset that gets smarter and more profitable with every test you run.

This methodical approach takes you beyond the simple A/B vs. multivariate choice and into a system of continuous, compounding growth. Your next step is to choose one high-traffic page, form one clear hypothesis, and launch one test. That's how a real optimization program begins.

At CONVERTIBLES, we build compounding personalization programs for Shopify Plus brands ready to scale profit per visitor. See how we do it.